
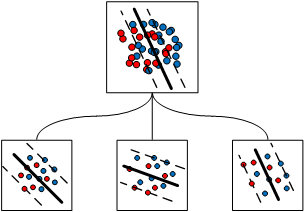

Kernel support vector machines (SVMs) are commonly used in machine learning applications for the Internet of Things (IoT). As the volume of data generated by IoT devices grows dramatically, centralized training approaches, which upload the devices' local data to a central location, can consume a large amount of network bandwidth. Distributed training approaches, which upload only local computational results to the central location, are therefore promising for kernel SVMs. However, in existing distributed training approaches, the local computational results contain much redundant data, leading to unnecessary bandwidth consumption. To reduce this redundancy, a new algorithm called RR-DKSVM is developed. In this algorithm, each device first trains a local SVM model over its local data with an increased penalty parameter. The support vectors of the local model, i.e., a small subset of the local data samples, are uploaded to a cloud server, where a global SVM model is trained over the support vectors collected from all devices. The global model is then refined through an iterative process. In each iteration, the global model is broadcast to all devices, which identify the local data samples that have not yet been uploaded but can change the global model. These samples are then uploaded to the cloud server to update the global model. It is proved that the global model trained by RR-DKSVM converges to the optimal SVM model. Experimental results show that RR-DKSVM improves communication efficiency by up to 17% compared with existing approaches.
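The upload-and-refine loop described above can be illustrated with a small pure-Python sketch. This is not the authors' implementation: the bias-free kernel SVM, the RBF kernel with `GAMMA = 0.5`, the dual coordinate ascent solver, the penalty multiplier `boost=2.0`, and every function name (`train_svm`, `support_ids`, `decision`, `rr_dksvm`) are assumptions made for illustration. To decide whether a non-uploaded sample "can change the global model", the sketch uses the standard KKT condition for an SVM: a sample whose dual variable is zero leaves the optimum unchanged when `y_i * f(x_i) >= 1`, so a device uploads only samples with `y_i * f(x_i) < 1`.

```python
import math
import random

GAMMA = 0.5  # assumed RBF kernel width (illustrative choice)
TOL = 1e-6   # dual variables above this threshold count as support vectors


def rbf(x, z):
    """Gaussian (RBF) kernel between two feature tuples."""
    return math.exp(-GAMMA * sum((a - b) ** 2 for a, b in zip(x, z)))


def train_svm(X, y, C, epochs=200):
    """Bias-free kernel SVM trained by dual coordinate ascent (assumed solver).

    Maximizes sum(a) - 1/2 a^T Q a subject to 0 <= a_i <= C,
    with Q_ij = y_i y_j K(x_i, x_j). Returns the dual variables.
    """
    n = len(X)
    Q = [[y[i] * y[j] * rbf(X[i], X[j]) for j in range(n)] for i in range(n)]
    a = [0.0] * n
    for _ in range(epochs):
        for i in range(n):
            grad = 1.0 - sum(Q[i][j] * a[j] for j in range(n))
            # Exact maximization along coordinate i, clipped to the box [0, C].
            a[i] = min(C, max(0.0, a[i] + grad / Q[i][i]))
    return a


def support_ids(alpha):
    """Indices of samples with a nonzero dual variable (the support vectors)."""
    return [i for i, ai in enumerate(alpha) if ai > TOL]


def decision(model, x):
    """f(x) = sum_i alpha_i * y_i * K(x_i, x) over the model's support vectors."""
    return sum(ai * yi * rbf(xi, x) for xi, yi, ai in model)


def rr_dksvm(devices, C, boost=2.0, max_rounds=20, margin_tol=1e-3):
    """Hypothetical sketch of the RR-DKSVM loop; `devices` is a list of (X, y)."""
    sent = []                    # per-device sets of already-uploaded indices
    server_X, server_y = [], []  # samples held by the cloud server
    # Step 1: each device trains a local SVM with an increased penalty
    # (boost * C) and uploads only its support vectors.
    for X, y in devices:
        ids = support_ids(train_svm(X, y, boost * C))
        sent.append(set(ids))
        server_X += [X[i] for i in ids]
        server_y += [y[i] for i in ids]
    for rounds in range(1, max_rounds + 1):
        # Step 2: the server trains the global SVM over all uploaded samples.
        alpha = train_svm(server_X, server_y, C)
        model = [(server_X[i], server_y[i], alpha[i]) for i in support_ids(alpha)]
        # Step 3: the model is broadcast; each device uploads its not-yet-sent
        # samples that violate the margin and could change the global optimum.
        new = 0
        for d, (X, y) in enumerate(devices):
            for i in range(len(X)):
                if i not in sent[d] and y[i] * decision(model, X[i]) < 1 - margin_tol:
                    sent[d].add(i)
                    server_X.append(X[i])
                    server_y.append(y[i])
                    new += 1
        if new == 0:
            break  # no device holds a sample that would change the model
    # If max_rounds is hit, the last uploads are counted but not yet trained on.
    return model, len(server_X), rounds
```

Each round either uploads at least one new sample or stops, so the loop ends after at most as many rounds as there are samples (capped here by `max_rounds`). When no device reports a margin violation, the global dual solution, extended with zeros for the samples that were never uploaded, satisfies the KKT conditions of the full problem up to the solver tolerance. The bias-free formulation was chosen only because it keeps the solver to a few lines; a standard SVM with a bias term uses the same `y_i * f(x_i) < 1` upload test.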

People:

Xiaochen Zhou and Jiawei Zhang

Publication:

X. Zhou, J. Zhang, S. Zhang, X. Wang, "Redundancy Reducing Distributed Kernel Support Vector Machines for IoT", submitted to IEEE Transactions on Cybernetics.
